Adaptive image caching based on network speed with Workbox.js

Progressive Web Apps (PWAs) seem to be the latest buzzword. The term refers to applications that are not native (not explicitly written for Android or iOS devices using those platforms' programming languages) but are instead web applications with "mobile-like" features. These features include offline capabilities, meaning that data for a given web app should remain available to users even when they are offline.

Special thanks to Jeff Posnick for his support in creating the sample application as well as reviewing this article.

In this article, we'll walk through the process of creating a web application that works offline, as well as making sure that the application's image assets are cached based on network speed.

In a previous article, we discussed how to adaptively load images based on network speed. If you'd like to get familiar with this concept, please read that post first.

Get the code and see the app

If you'd like to see the application, please visit https://workbox-cnofarfszr.now.sh/ (best viewed with Google Chrome). The application has all the required functionality; open up Chrome DevTools to see some output from Workbox. You can also take the application offline to test its offline functionality.

The source code found in this article can be accessed on GitHub: https://github.com/tpiros/cloudinary-workbox-example.

Please note that if you are trying to run the application in your local environment, you need to access it via `localhost` and not via a network IP address, otherwise it will not function. This is because service workers require a Secure Context, so the application needs to be served either over `https` or from `localhost`. Whether a page is running in a secure context is reflected by the value of `window.isSecureContext`.
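To make the Secure Context rule more concrete, here is a simplified sketch of the "potentially trustworthy origin" check described in the W3C Secure Contexts specification. The function name `isPotentiallyTrustworthy` is hypothetical, the sketch leaves out several cases the spec covers, and browsers have differed over time in how they treat local hosts, so in real code rely on `window.isSecureContext` rather than reimplementing the check.

```javascript
// Simplified sketch of the "potentially trustworthy origin" rule from the
// W3C Secure Contexts spec (hypothetical helper; not exhaustive).
function isPotentiallyTrustworthy(urlString) {
  const { protocol, hostname } = new URL(urlString);

  // Encrypted schemes are always trustworthy.
  if (protocol === 'https:' || protocol === 'wss:') {
    return true;
  }

  // Loopback hosts are trustworthy even over plain HTTP.
  if (hostname === 'localhost' || hostname.endsWith('.localhost')) {
    return true;
  }
  if (/^127(?:\.\d{1,3}){3}$/.test(hostname) || hostname === '[::1]') {
    return true;
  }

  // Anything else served over plain HTTP is not a secure context.
  return false;
}

console.log(isPotentiallyTrustworthy('http://localhost:8081/')); // true
console.log(isPotentiallyTrustworthy('http://192.168.1.20:8081/')); // false
```

This is why opening the app through a LAN address such as `http://192.168.1.20:8081` (a made-up example) prevents the service worker from registering, while `http://localhost:8081` works.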

The architecture

For this article we'll create a relatively simple application with the following architecture:

- An Express application server that serves two purposes:
  - Act as a REST API server that returns data
  - Act as an HTTP server that serves the web app itself
- A single entry point to the application
- A single JS file to retrieve and display data from the API

The API & HTTP server

Let's start with the most straightforward item: the REST API and the HTTP server. There's nothing extraordinary about this setup:

```javascript
const express = require('express');
const app = express();
const news = require('./data').news;

// REST endpoint returning the news items as JSON
app.get('/api/news', (req, res) => res.status(200).json(news));

// Serve the web app's static files
app.use(express.static(`${__dirname}/build`));

const server = app.listen(8081, () => {
  const host = server.address().address;
  const port = server.address().port;
  console.log(`App listening at http://${host}:${port}`);
});
```

The news variable that we create is just an array of objects containing news items, each having a title, slug, image and added property:

```javascript
// data.js
const currentDateTime = new Date();

const news = [
  {
    title: 'Roma beats Torino',
    slug:
      'Edin Dzeko scored a late stunning goal to snatch a 1-0 win for Roma at Torino in their opening Serie A game on Sunday.',
    image: 'https://res.cloudinary.com/tamas-demo/image/upload/pwa/dzeko.jpg',
    added: new Date(
      currentDateTime.getFullYear(),
      currentDateTime.getMonth(),
      currentDateTime.getDate(),
      currentDateTime.getHours() - 1,
      currentDateTime.getMinutes() - Math.floor(Math.random() * 4 + 1),
      0
    ),
  },
  {
    // ... etc
  },
];

module.exports = {
  news,
};
```

Notice that in the `image` property above, we are referencing an image asset previously uploaded to Cloudinary.
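The `added` value relies on the fact that JavaScript's `Date` constructor normalises out-of-range fields: subtracting from `getHours()` or `getMinutes()` can produce negative values, and the constructor rolls them back across day, month or year boundaries instead of failing. The hypothetical `shiftBack()` helper below isolates that calculation so you can see what happens just after midnight on New Year's Day.

```javascript
// Hypothetical helper mirroring how data.js builds `added`:
// the Date constructor normalises negative hour/minute values.
function shiftBack(base, hours, minutes) {
  return new Date(
    base.getFullYear(),
    base.getMonth(),
    base.getDate(),
    base.getHours() - hours, // may become negative
    base.getMinutes() - minutes, // may become negative
    0
  );
}

// 1 January 2018, 00:02 local time (months are zero-based)
const justAfterMidnight = new Date(2018, 0, 1, 0, 2, 0);
const added = shiftBack(justAfterMidnight, 1, 4);

// added is 31 December 2017, 22:58 local time
console.log(added.toString());
```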

The application

Let's take a look at the application's code itself now. We start by constructing a simple HTML page with some CSS as well:

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="utf-8" />
    <title></title>
    <meta name="description" content="" />
    <meta name="author" content="Tamas Piros" />
    <link rel="stylesheet" href="bootstrap.css" />
    <link rel="stylesheet" href="app.css" />
    <script src="app.js" defer></script>
  </head>
  <body>
    <h1>Sports Time - Your favourite online sports magazine</h1>
    <div class="container">
      <div class="row">
        <div class="card-deck"></div>
      </div>
    </div>
  </body>
</html>
```

```css
.container {
  padding: 1px;
}

body {
  background-color: rgba(255, 255, 255, 0);
}

html {
  background: url('https://res.cloudinary.com/tamas-demo/image/upload/pwa/background.jpg')
    no-repeat center center fixed;
  -webkit-background-size: cover;
  -moz-background-size: cover;
  -o-background-size: cover;
  background-size: cover;
}

h1 {
  background: yellow;
}
```

In the HTML code sample above, we are referencing app.js (loaded with `defer`, so it only runs once the page has been parsed and the `.card-deck` element exists). That file is responsible for two things: first, it registers a service worker (we'll take a look at that in a moment); second, it uses the Fetch API to retrieve data from the API that we created and inserts the appropriate elements into the page:

```javascript
// app.js
function generateCard(title, slug, added, img) {
  // generates & constructs the card's HTML; must return a DOM element for appendChild()
}

fetch('/api/news')
  .then((response) => response.json())
  .then((news) => {
    console.log(news);
    const cardDeck = document.querySelector('.card-deck');
    const today = new Date();
    news.forEach((n) => {
      const added = new Date(n.added);
      // Whole hours elapsed since the item was added (Math.floor instead of
      // parseInt, which misreads tiny numbers written in exponent notation)
      const difference = Math.floor((today - added) / (1000 * 3600));
      const card = generateCard(n.title, n.slug, difference, n.image);
      cardDeck.appendChild(card);
    });
  });
```

Let's serve this application to see how things look at the moment.

Please note that the Express server above serves static files from the `build` folder, which is something we'll only create later on. Until then, you may need to edit the `express.static()` call in the server so that it serves the files from the right location, for example from your working folder (`app`).
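The app.js listing above leaves out the body of `generateCard()`. If you are following along without the repository, here is one possible implementation, not the author's: it assumes Bootstrap 4's card classes (which fit the `.card-deck` container in index.html), escapes the API data before interpolating it into HTML, and turns the markup into an element via a `<template>`. The helper names `escapeHtml` and `cardMarkup` are hypothetical.

```javascript
// Hypothetical implementation of generateCard(); the article omits the real one.

// Escape text before interpolating it into HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Builds the card markup as a string (easy to test outside the browser).
// `added` is the number of whole hours since the item was published.
function cardMarkup(title, slug, added, img) {
  const age = added === 1 ? '1 hour ago' : `${added} hours ago`;
  return `<div class="card">
  <img class="card-img-top" src="${escapeHtml(img)}" alt="${escapeHtml(title)}">
  <div class="card-body">
    <h5 class="card-title">${escapeHtml(title)}</h5>
    <p class="card-text">${escapeHtml(slug)}</p>
    <p class="card-text"><small class="text-muted">Added ${age}</small></p>
  </div>
</div>`;
}

// Browser only: converts the markup into a DOM element for appendChild().
function generateCard(title, slug, added, img) {
  const template = document.createElement('template');
  template.innerHTML = cardMarkup(title, slug, added, img).trim();
  return template.content.firstElementChild;
}
```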

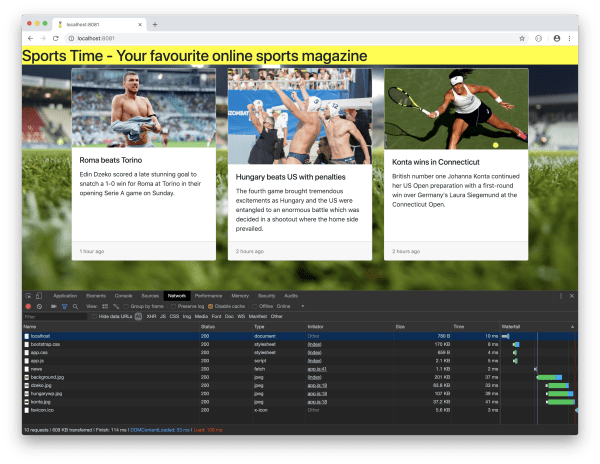

Let's examine this a little bit. There are ten requests, transferring 609 KB in 114 milliseconds (these values were recorded on my machine; your results may differ). These ten requests are a combination of requests retrieving images as well as fetching data from the API.

Taking the application offline now presents the infamous "downasaur".

I recommend using the Chrome web browser for testing purposes. Launch DevTools and open the 'Network' tab, where you'll find an 'Offline' option that takes the application offline.

At this moment we have an application, but it's not a PWA by any means, since it doesn't work when it's offline. There are also certain performance improvements we could make so that the application loads faster and requests less data. Let's take a look at these.

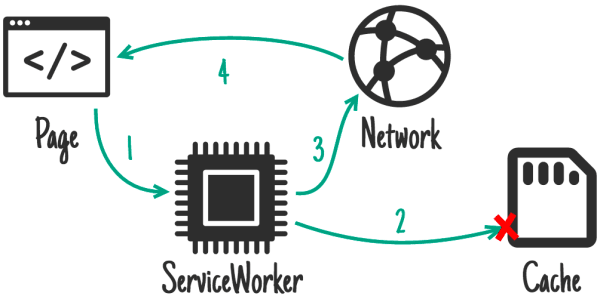

Service Workers

Very simply put, we can imagine the service worker as a proxy sitting between the web application and the network. Service Workers are written in JavaScript, and they are capable of intercepting the application's normal request/response cycle. Let's register a service worker:

```javascript
// app.js
if ('serviceWorker' in navigator) {
  window.addEventListener('load', () => {
    navigator.serviceWorker
      .register('/sw.js')
      .then((registration) => {
        console.log(`Service Worker registered! Scope: ${registration.scope}`);
      })
      .catch((err) => {
        console.log(`Service Worker registration failed: ${err}`);
      });
  });
}
```

Once this is done, we need to come up with the functionality for the Service Worker. The above code references a file sw.js, which is the file containing the Service Worker code.

Workbox

Workbox is a set of JavaScript libraries created by Google that allows developers to add offline support to web applications.

Workbox comes with a nice set of boilerplates that makes life easier when it comes to working with Service Workers. By default, Workbox can do precaching, request routing and utilise various offline strategies.

You may have used or heard of sw-precache and sw-toolbox. You can think of Workbox as a combined and enhanced version of these two tools.

Strategies

Let's do a quick demonstration of why working with Workbox will help us down the road. When creating offline-first applications, a common caching strategy is to return a resource from the cache if it's available there, and to make a request to the network if it's not.

Typically the code would look something like this:

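```javascript
// sw.js: cache first, falling back to the network, for every request
self.addEventListener('fetch', (event) => {
  event.respondWith(
    caches.match(event.request).then((response) => {
      // Serve the cached response if there is one, otherwise go to the network
      return response || fetch(event.request);
    })
  );
});
```

Now imagine that we wanted to apply this strategy only to images. The code quickly gets more complex.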
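For instance, a hand-written version restricted to images might look like the sketch below. It is an illustration under assumptions, not code from the sample app: the cache name `images` and the helpers `isImageRequest()` and `cacheFirst()` are hypothetical, and unlike the snippet above it also stores network responses so that the cache actually gets populated.

```javascript
// sw.js (sketch, no Workbox): cache first, but only for image requests.
const IMAGE_CACHE = 'images'; // hypothetical cache name
const IMAGE_EXTENSION = /\.(?:jpe?g|png|gif|webp)$/i;

function isImageRequest(request) {
  // Request.destination is 'image' for image loads in supporting browsers;
  // the file-extension check is a fallback.
  return (
    request.destination === 'image' ||
    IMAGE_EXTENSION.test(new URL(request.url).pathname)
  );
}

async function cacheFirst(request, cache, fetchFn) {
  const cached = await cache.match(request);
  if (cached) {
    return cached;
  }
  const response = await fetchFn(request);
  // Only store successful responses. Opaque (no-cors) responses report
  // ok === false, so this sketch does not cache them.
  if (response.ok) {
    await cache.put(request, response.clone());
  }
  return response;
}

// Only register the listener when running as a service worker.
if (typeof ServiceWorkerGlobalScope !== 'undefined' && self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener('fetch', (event) => {
    if (!isImageRequest(event.request)) {
      return; // not an image: let the browser handle it normally
    }
    event.respondWith(
      caches.open(IMAGE_CACHE).then((cache) => cacheFirst(event.request, cache, fetch))
    );
  });
}
```

Even this small version needs request filtering, cache bookkeeping and response checks, which is the kind of boilerplate Workbox takes care of.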

With Workbox all we need is the following boilerplate code:

```javascript
workbox.routing.registerRoute(
  new RegExp('/images/'),
  workbox.strategies.cacheFirst()
);
```

The above matches requests against the regular expression provided and applies the cache-first strategy to them. Much simpler and more straightforward. Of course, the cacheFirst() method can also accept an object with options to further customise the caching strategy.

So now we have established that Workbox gives us some boilerplate code to work with Service Workers. The question is, how do we use Workbox?

The Service Worker file

Let's create our Service Worker (sw.js) and add the following line right at the beginning:

```javascript
importScripts(
  'https://storage.googleapis.com/workbox-cdn/releases/3.6.1/workbox-sw.js'
);

if (workbox) {
  console.log('Workbox is loaded');
} else {
  console.log('Could not load Workbox');
}
```

importScripts() is a method that we can use within the Service Worker to synchronously load one or more scripts that we intend to use within the Service Worker.

Refresh the application, and hopefully the 'Workbox is loaded' message appears in the DevTools console.

Precaching

The next step is to precache some static assets. By static assets, we mean things like index.html and the CSS and JS files that make up the application.

Workbox can help us out by doing what is called precaching. Precaching means that static assets (files) are downloaded and cached while the Service Worker is being installed. Installation only completes once these files are cached, so by the time the service worker is installed, the files are already in the cache.
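For comparison, here is roughly what precaching looks like without Workbox: the service worker's `install` handler downloads a hard-coded list of files into a cache. This is a sketch under assumptions: the cache name `precache-v1` and the `precache()` helper are hypothetical, and the file list simply mirrors this application's static assets.

```javascript
// sw.js (sketch, without Workbox): precache a fixed list of files while
// the service worker installs.
const PRECACHE_NAME = 'precache-v1'; // hypothetical; change it to force a re-cache
const PRECACHE_URLS = ['/', '/index.html', '/bootstrap.css', '/app.css', '/app.js'];

function precache(cacheStorage, cacheName, urls) {
  // addAll() fetches every URL and stores the responses. If any request
  // fails, the whole operation rejects and nothing is stored.
  return cacheStorage.open(cacheName).then((cache) => cache.addAll(urls));
}

// Only register the listener when running as a service worker.
if (typeof ServiceWorkerGlobalScope !== 'undefined' && self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener('install', (event) => {
    // Installation does not complete until this promise settles; if it
    // rejects, the new service worker is discarded.
    event.waitUntil(precache(caches, PRECACHE_NAME, PRECACHE_URLS));
  });
}
```

Keeping that list and the cache version in sync with every change by hand is exactly the chore Workbox automates.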

Our application is relatively small, but even now it would be a daunting task to list the assets for precaching manually. Luckily, Workbox can do this automatically; we only need to provide some patterns. There are three ways to do this: via the CLI, via Gulp or via Webpack. We'll be using Gulp to generate the list of static assets.

Since we are going to be using Gulp, it makes sense to use it not only for Workbox-related tasks but also to create a build of our application and place it into a separate folder. Let's set all of this up:

```javascript
// Gulpfile.js
const gulp = require('gulp');
const del = require('del');
const workboxBuild = require('workbox-build');

// Remove the previous build (including dotfiles)
const clean = () => del(['build/*'], { dot: true });
gulp.task('clean', clean);

// Copy everything from the working folder (app) into build
const copy = () => gulp.src(['app/**/*']).pipe(gulp.dest('build'));
gulp.task('copy', copy);

// Inject the list of static assets into the service worker
const serviceWorker = () => {
  return workboxBuild.injectManifest({
    swSrc: 'app/sw.js',
    swDest: 'build/sw.js',
    globDirectory: 'build',
    globPatterns: ['*.css', '*.css.map', 'index.html', 'app.js'],
  });
};
gulp.task('service-worker', serviceWorker);

const build = gulp.series('clean', 'copy', 'service-worker');
gulp.task('build', build);

const watch = () => gulp.watch('app/**/*', build);
gulp.task('watch', watch);

gulp.task('default', build);
```

The above is fairly self-explanatory: clean the build folder, copy all files over from the working folder (app) into build, and inject the list of static assets into the service worker.

You are probably wondering how Workbox knows where to inject these assets. Good question! We need to tell it, and the place to do that is sw.js:

```javascript
workbox.precaching.precacheAndRoute([]);
```

Let's execute the gulp task and see its result: open up build/sw.js, and hopefully a list of assets is visible in the file:

```javascript
workbox.precaching.precacheAndRoute([
  {
    url: 'app.css',
    revision: 'aaa3faaa6a5432c4d3c74c61368807ba',
  },
  // other assets ...
]);
```

The revision tells Workbox whether an asset has changed, and therefore whether it needs to be re-cached.
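workbox-build derives each revision from the contents of the file, so editing a file changes its revision while untouched files keep theirs. The Node sketch below illustrates that idea with the built-in `crypto` module. It assumes an MD5 hex digest (which matches the 32-character revision above), and `buildManifest()` and `changedUrls()` are hypothetical helpers, not part of workbox-build's API.

```javascript
const crypto = require('crypto');

// Revision = hex digest of the file's contents (MD5 assumed here), so any
// change to a file produces a different revision.
function revisionFor(contents) {
  return crypto.createHash('md5').update(contents).digest('hex');
}

// files: { url: contents } -> [{ url, revision }], the shape injected into sw.js
function buildManifest(files) {
  return Object.entries(files).map(([url, contents]) => ({
    url,
    revision: revisionFor(contents),
  }));
}

// URLs whose revision is new or different, i.e. the ones to re-cache.
function changedUrls(oldManifest, newManifest) {
  const previous = new Map(oldManifest.map(({ url, revision }) => [url, revision]));
  return newManifest
    .filter(({ url, revision }) => previous.get(url) !== revision)
    .map(({ url }) => url);
}

const before = buildManifest({ 'app.css': 'h1 { background: yellow; }', 'app.js': 'console.log(1);' });
const after = buildManifest({ 'app.css': 'h1 { background: yellow; }', 'app.js': 'console.log(2);' });
console.log(changedUrls(before, after)); // [ 'app.js' ]
```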

Let's run our application to see what's going on. Make sure that the browser console is also open, to see Workbox's logging listing the precached URLs.

Workbox stores the mapping between the assets and their revisions and full URLs in IndexedDB. Explore your IndexedDB to see this in action. To learn more about Workbox's precaching refer to this article.

Now that we have some assets in the cache, let's try the application offline. All we see is the `<h1>` element. Well, that's at least something. Everything else fails because we depend on JavaScript to build the cards that display the latest news, and that code, in turn, grabs data from the API. But the API is not accessible, as denoted by the red error in the console.

An offline API

So far so good, but we need to add a lot more to achieve an application that also functions offline. What's missing is the data from the API. Think about it this way: if we have a network connection, we can go to the API and retrieve some data, but if we are offline we can't access the API at all. We need a mechanism in place to store the data returned from the API somewhere and to be able to access it when there's no active network connection.

Workbox will come to the rescue. Remember the caching strategies that we discussed earlier? Let's actually use one of them:

```javascript
workbox.routing.registerRoute(
  '/api/news',
  workbox.strategies.staleWhileRevalidate({
    cacheName: 'api-cache',
  })
);
```

In the code above, we capture requests going to /api/news and apply the staleWhileRevalidate caching strategy to them. We also pass in an option to give the cache a name.

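So what does staleWhileRevalidate do? If there's a cached response, it's returned straight away, but a network request is also made in the background to fetch an update and cache it, so the next time more up-to-date data will be returned. If nothing is cached yet, the response comes from the network.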
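The behaviour can be sketched in plain JavaScript. This is a simplified illustration, not Workbox's implementation: `staleWhileRevalidate()` is a hypothetical function, `cache` and `fetchFn` are passed in (in a service worker they would be an opened Cache and `fetch`), and the optional `waitUntil` callback stands in for `event.waitUntil()` so that the background refresh can finish after the response has been sent.

```javascript
// Simplified stale-while-revalidate sketch (not Workbox's implementation).
async function staleWhileRevalidate(request, cache, fetchFn, waitUntil = () => {}) {
  const cached = await cache.match(request);

  // Always start a network request to refresh the cache.
  const update = fetchFn(request).then(async (response) => {
    if (response && response.ok) {
      await cache.put(request, response.clone());
    }
    return response;
  });

  // Keep the refresh alive; ignore its errors here (e.g. when offline),
  // since a cached copy may already have been served.
  waitUntil(update.catch(() => undefined));

  // Stale: serve the cached copy immediately if there is one.
  // Otherwise wait for the network response (errors propagate to the caller).
  return cached || update;
}
```

Serving the stale copy first keeps the app fast and usable offline, at the cost of showing data that may be one request out of date.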

Let's rerun gulp, refresh the application and see the result in action (and don't forget to take the application offline).

Because a service worker is already active, we need to make sure that the old one is no longer registered, so that the updated one takes over. There are several ways of doing this; the simplest is to open the 'Application' tab in Chrome's DevTools, select 'Clear storage' and click the 'Clear site data' button. This removes active service workers and cached data, amongst other things.

Awesome, we now see the data even when the application is offline. What we don't see are the image assets. This makes sense, since those are stored in Cloudinary, and we need to retrieve them via an HTTP request that nothing caches yet.

Caching images

We can also cache images and make them available when the application is offline. We'll do this via the cache-first strategy that we discussed earlier in this article.

```javascript
// cloudinaryPlugin (shown below) must be declared before this call in sw.js,
// because a `const` cannot be used before it has been initialised.
workbox.routing.registerRoute(
  new RegExp('^https://res.cloudinary.com'),
  workbox.strategies.cacheFirst({
    cacheName: 'cloudinary-images',
    plugins: [
      cloudinaryPlugin,
      new workbox.expiration.Plugin({
        maxEntries: 50, // keep at most 50 images in this cache
        purgeOnQuotaError: true, // allow this cache to be deleted if storage runs out
      }),
    ],
  })
);
```

The above code matches all requests made to Cloudinary via a regular expression. We also pass in a custom plugin (cloudinaryPlugin) that handles images stored in Cloudinary. As you can see, we can add further options to the caching strategy: not just the cache name, but also how many entries we allow in the cache (maxEntries), and whether the cache can be deleted if the web application exceeds the available storage (purgeOnQuotaError).
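Why is the pattern anchored with `^`? Workbox v3's RegExp routes only handle a cross-origin request when the regular expression matches from the very beginning of the URL; a match somewhere in the middle of a cross-origin URL is ignored. The hypothetical `regExpRouteMatches()` helper below is a simplified sketch of that rule, not Workbox's actual code, so check the workbox-routing documentation for the version you use.

```javascript
// Simplified sketch of the rule Workbox v3's RegExp routes apply
// (hypothetical helper, not Workbox's actual code).
function regExpRouteMatches(regExp, requestUrl, pageOrigin) {
  const url = new URL(requestUrl);
  const result = regExp.exec(url.href);
  if (!result) {
    return false;
  }
  // Cross-origin URLs only match if the pattern matches from the very first
  // character of the URL; partial matches are ignored.
  if (url.origin !== pageOrigin && result.index !== 0) {
    return false;
  }
  return true;
}

const pageOrigin = 'http://localhost:8081';
const image = 'https://res.cloudinary.com/tamas-demo/image/upload/pwa/dzeko.jpg';
console.log(regExpRouteMatches(new RegExp('^https://res.cloudinary.com'), image, pageOrigin)); // true
console.log(regExpRouteMatches(new RegExp('res.cloudinary.com'), image, pageOrigin)); // false
```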

Learn more about Workbox Strategies.

So what does the cloudinaryPlugin do exactly? It checks whether the request is, in fact, for an image and then applies URL manipulation to the image served from Cloudinary. (Note that the request is intercepted, so no image is served until the plugin has finished its job.) Once the final URL is ready, the request is sent through with the modified URL. The code below represents what we discussed:

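```javascript
const cloudinaryPlugin = {
  // Workbox calls requestWillFetch before a request goes to the network;
  // it should resolve to the Request that will actually be fetched.
  requestWillFetch: async ({ request }) => {
    if (/\.(?:jpg|png|gif|webp)$/.test(request.url)) {
      const url = request.url.split('/');
      let newPart;
      const format = 'f_auto';

      // Pick a Cloudinary quality setting based on the connection type
      switch (
        navigator && navigator.connection
          ? navigator.connection.effectiveType
          : ''
      ) {
        case '4g':
          newPart = 'q_auto:good';
          break;
        case '3g':
          newPart = 'q_auto:eco';
          break;
        case '2g':
        case 'slow-2g':
          newPart = 'q_auto:low';
          break;
        default:
          newPart = 'q_auto:good';
          break;
      }

      // Insert the transformation before the last two URL segments
      // (here: the "pwa" folder and the file name)
      url.splice(url.length - 2, 0, `${newPart},${format}`);
      const finalUrl = url.join('/');
      const newUrl = new URL(finalUrl);
      return new Request(newUrl.href, { headers: request.headers });
    }
    // Not an image: fetch the original request unchanged
    return request;
  },
};
```

The above code is discussed in detail in the article Adaptive image loading based on network speed.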
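Because the URL rewrite is the heart of the plugin, it can help to pull it out into a pure function that can be tested without a service worker. The sketch below is an alternative to the `split('/')` and `splice(url.length - 2, ...)` approach rather than the article's code: Cloudinary expects transformations directly after the `/upload/` segment of a delivery URL, so inserting them there also works for public IDs with no folder or with nested folders, whereas `url.length - 2` assumes exactly one folder (like `pwa/`). The helper name `transformCloudinaryUrl` is hypothetical.

```javascript
// Sketch: the URL rewrite from cloudinaryPlugin as a pure, testable function.
// Hypothetical helper; it inserts the transformation right after "/upload/".
const QUALITY_BY_CONNECTION = new Map([
  ['4g', 'q_auto:good'],
  ['3g', 'q_auto:eco'],
  ['2g', 'q_auto:low'],
  ['slow-2g', 'q_auto:low'],
]);

function transformCloudinaryUrl(imageUrl, effectiveType) {
  // Unknown or unavailable connection types fall back to good quality.
  const quality = QUALITY_BY_CONNECTION.get(effectiveType) || 'q_auto:good';
  const url = new URL(imageUrl);
  const marker = '/upload/';
  const at = url.pathname.indexOf(marker);
  if (at === -1) {
    return imageUrl; // not a Cloudinary upload URL: leave it untouched
  }
  const insertAt = at + marker.length;
  url.pathname =
    url.pathname.slice(0, insertAt) + `${quality},f_auto/` + url.pathname.slice(insertAt);
  return url.href;
}

console.log(transformCloudinaryUrl('https://res.cloudinary.com/tamas-demo/image/upload/pwa/dzeko.jpg', '3g'));
// https://res.cloudinary.com/tamas-demo/image/upload/q_auto:eco,f_auto/pwa/dzeko.jpg
```

Inside `requestWillFetch`, you could then return `new Request(transformCloudinaryUrl(request.url, effectiveType), { headers: request.headers })`, with `effectiveType` read from `navigator.connection` as in the plugin above.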

Time for a final test. Let's run gulp again, refresh the application and see what happens when the application is taken offline.

We now have an application that works offline: it's capable of loading data from an API as well as loading images, and it also applies manipulation to the images, which in turn means that the entire site loads faster. Earlier we saw ten requests, totalling 609 KB. Now we have 15 requests that load 164 KB of assets. (The increase in the number of requests is due to the service worker.)

Also, check what happens when you load the application on a 3G connection: select the 'Slow 3G' option under the Network tab in DevTools. The total weight of the assets is only 6.7 KB!

Conclusion

In this article, we have reviewed how to create an application using Workbox.js that caches responses from a REST API as well as stores images in the browser's cache. We also had a look at how to store optimised images by creating a Cloudinary Workbox plugin.