Creating a Realtime PWA Using Angular and Firebase (Part 1)

In a series of articles, we'll discuss how to create a real-time, progressive web application using Angular and Firebase.

Access the repository

You can access the code discussed in this article on GitHub.

If you are new to Progressive Web Applications, or simply interested in them, I am glad to let you know that I have self-published a book on the topic, which you can purchase online.

Getting started

To get started, first make sure that you have the Angular CLI installed on your system (npm i -g @angular/cli) and that you have created an Angular app (ng new [project-name] --routing).

Building Out the App

First things first, let's build the application and let's not worry about offline capabilities or any other PWA feature - we will add those in later.

The application will have two routes, which will load two components. One component is going to be responsible for displaying a feed of photos while the other one will allow users to take pictures. In light of this, let's go ahead and create the two components: ng g c feed && ng g c capture.

For the routing, edit app-routing.module.ts:

const routes: Routes = [

{ path: '', component: FeedComponent },

{ path: 'capture', component: CaptureComponent },

];

That's it for the routing.

Styling

Any style and CSS framework would be an excellent fit for our app but let's go with Material design, which we can add by executing ng add @angular/material.

Please read these articles for additional information on Material design as well as Bootstrap in an Angular context.

We will be using 3 modules from the Material library, so let's collect those and add them to app.module.ts:

const MatModules = [

MatButtonModule,

MatCardModule,

MatSnackBarModule

];

@NgModule({

// removed for brevity

imports: [

...MatModules,

],

})

Let's implement the routing in app.component.html by adding the following HTML structure:

<div class="navigation">

<button mat-raised-button color="primary" routerLink="/">Feed</button>

<button mat-raised-button color="primary" routerLink="/capture">

Capture

</button>

</div>

<div class="main-div">

<router-outlet></router-outlet>

</div>

This will add two buttons that will load the different components.

At this point we are in a good state: we have a very basic application. Now we need to add the two main features, the option to take a picture and the option to list the pictures taken.

Let's talk about the former - the capability to take a picture via the application. Open capture.component.html and add the following HTML code:

<mat-card class="z-depth center" flex="50">

<mat-card-header>

<mat-card-title

>Smile - and take a selfie

<span></span>📱</mat-card-title

>

</mat-card-header>

<video

#video

id="video"

mat-card-image

autoplay

*ngIf="displayStream"

></video>

<canvas

#canvas

id="canvas"

mat-card-image

[width]="width"

[height]="height"

[hidden]="displayStream"

></canvas>

<mat-card-content>

<p>Snap away!</p>

</mat-card-content>

<mat-card-actions *ngIf="actions">

<button

mat-raised-button

color="primary"

(click)="capture()"

*ngIf="displayStream"

>

Capture Photo

</button>

<button

mat-raised-button

color="warn"

(click)="retakePhoto()"

*ngIf="!displayStream"

>

Retake Photo

</button>

<button

mat-raised-button

color="accent"

(click)="usePhoto()"

*ngIf="online && !displayStream"

>

Use photo

</button>

</mat-card-actions>

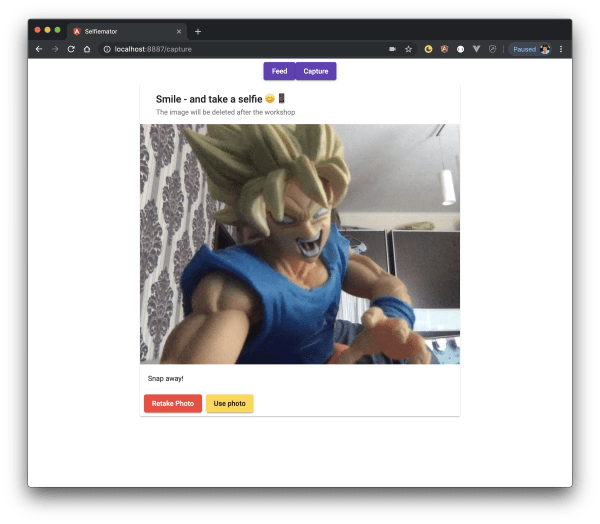

</mat-card>The above displays a <canvas> as well as a few buttons based on some conditions via ngIf. Think about it this way: we will show a direct stream from the camera (once we get consent from the user of course; the prompt is displayed automatically by the browser.) We also provide a button for our users to capture a photo. Once that photo is taken we give them two options: either retake it or to use it and upload it.
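To make the button conditions easier to reason about, here is a minimal sketch that models the three template flags as plain data and derives which buttons would be rendered. The names CaptureViewState, CaptureButton and visibleButtons are hypothetical and exist only for this illustration; the component itself relies on the template directives shown above.

```typescript
// Hypothetical model of the flags used in capture.component.html.
interface CaptureViewState {
  actions: boolean;       // true once the video stream is playing
  displayStream: boolean; // true while the live camera stream is shown
  online: boolean;        // connectivity flag used by the "Use photo" button
}

type CaptureButton = 'capture' | 'retake' | 'use';

// Returns the buttons the template would render for a given state.
function visibleButtons(state: CaptureViewState): CaptureButton[] {
  if (!state.actions) {
    // <mat-card-actions *ngIf="actions"> hides every button.
    return [];
  }
  const buttons: CaptureButton[] = [];
  if (state.displayStream) {
    buttons.push('capture');
  } else {
    buttons.push('retake');
    if (state.online) {
      buttons.push('use');
    }
  }
  return buttons;
}
```

For example, while the stream is live only 'capture' is returned, and after a photo is taken the result switches to 'retake' plus, when online, 'use'.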

We'll take a look at the TypeScript code behind the component as well in a moment but let's discuss photo management first.

Managing photos

As mentioned in the title, we are using Firebase to add real-time features to the application. Firebase is also capable of storing binary data (images) since it has a cloud storage feature. This would work; however, if we'd like to do additional things with the uploaded images, such as applying transformations or further optimisations, then we'd need to look for another tool. Our choice is going to be Cloudinary: it allows us to store the images and offers great optimisation and transformation features.

Setting up Cloudinary

Luckily for us, Cloudinary also has an SDK for Angular, so we can use that pretty easily: npm install cloudinary-core @cloudinary/angular-5.x.

In app.module.ts let's add the following:

import { CloudinaryModule } from '@cloudinary/angular-5.x';

import { Cloudinary } from 'cloudinary-core';

export const cloudinaryLib = {

Cloudinary: Cloudinary

};

@NgModule({

// removed for brevity

imports: [

CloudinaryModule.forRoot(cloudinaryLib, { cloud_name: environment.cloudName, secure: true }),

],

})

Notice two things here. First, there's secure: true, a global setting that makes Cloudinary always serve images via HTTPS. Second, we are using cloud_name, which is your Cloudinary account name that you get after you register. It makes sense to place this piece of information in the environment.ts file:

export const environment = {

production: false,

cloudName: '',

uploadPreset: '',

};

Cloudinary Fundamentals

There are some basics that we need to get out of the way with regards to Cloudinary. Whenever we upload an image we get to control what the name looks like. It can be filename.jpg (in which case we'll end up overwriting the same image if we don't programmatically change its name). Cloudinary can also append a unique string to the filename to avoid collisions, like so: filename_abc123.jpg. And the last option, the one that we'll pick, is to have a completely random name: abc123.jpg. In Cloudinary's SDKs, the identifier for a photo (regardless of whether it's unique or not) is called a public ID. This is going to be the string that we save in our database.

Also, notice that we have something in the environment settings called uploadPreset. Since we'll upload images with an API call made directly from the client, this is considered to be an "unsigned" upload. For unsigned uploads, we need to create an upload preset.

Upload presets allow you to define the default behaviour for your uploads, instead of receiving these as parameters during the upload request itself. Parameters can include tags, incoming or on-demand transformations, notification URL, and more. Upload presets have precedence over client-side upload parameters.

Signed uploads are done from the server side using an API key and secret, but we should never expose these in a client. Hence we need to use an unsigned upload, which always requires an upload preset.
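As a rough illustration of what an unsigned upload needs, the sketch below assembles the request URL and body from the cloud name and upload preset. The helper name buildUnsignedUpload and the UnsignedUploadRequest type are hypothetical; the endpoint and the file and upload_preset fields are the ones used by usePhoto() later in this article. Note that no API key or secret appears anywhere.

```typescript
// Hypothetical shape of an unsigned upload request; not part of any SDK.
interface UnsignedUploadRequest {
  url: string;
  body: { file: string; upload_preset: string };
}

// Builds the request for an unsigned upload. Only the cloud name and the
// preset name are needed; both are safe to ship in client-side code.
function buildUnsignedUpload(
  cloudName: string,
  uploadPreset: string,
  dataUrl: string
): UnsignedUploadRequest {
  if (!cloudName || !uploadPreset) {
    throw new Error('cloudName and uploadPreset are required for unsigned uploads');
  }
  return {
    url: `https://api.cloudinary.com/v1_1/${encodeURIComponent(cloudName)}/image/upload`,
    body: { file: dataUrl, upload_preset: uploadPreset },
  };
}
```

Keeping the request construction in a small function like this makes it easy to fail early when the environment file has not been filled in yet.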

Upload Preset

To create an upload preset, log in to your Cloudinary account and hit the cogwheel to access your account settings. Then select the 'upload' tab, scroll down to the 'Upload presets' section and click on the 'Add upload preset' link.

The only thing that we need to change here is the 'Signing Mode': change it to 'unsigned' and hit 'save' at the top of the page. Make a note of the upload preset name and add it as uploadPreset to the previously mentioned environment.ts file.

Furthermore, every uploaded image (and any other asset, such as a video) is accessible via a URL, such as https://res.cloudinary.com/your-cloud-name/image/upload/public_id.extension
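The URL pattern above can be expressed as a small function. This is only a sketch: deliveryUrl is a hypothetical helper, and defaulting the extension to jpg is an assumption made for the example.

```typescript
// Builds a delivery URL following the pattern
// https://res.cloudinary.com/<cloud name>/image/upload/<public id>.<extension>
// The default extension is an assumption for this sketch.
function deliveryUrl(cloudName: string, publicId: string, extension: string = 'jpg'): string {
  return `https://res.cloudinary.com/${cloudName}/image/upload/${publicId}.${extension}`;
}
```

This is why the public ID alone is enough to find an image again: everything else in the URL is either fixed or known from the account.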

Adding optimisation and manipulation to images is something that we'll discuss later.

Firebase

Since the overall functionality of the app is going to be real-time driven, it's essential to add a service that is capable of delivering this functionality. As mentioned before, we'll have a feed that is going to display the pictures taken by other users. We could use any database to store that data; however, with Firebase, we will get a feed that gets updated in real time, without the users having to refresh it.

Setting Up Firebase

To get started with Firebase, consult the documentation. You will need a Google account so please go ahead and sign in with that. Once you are signed in, create a Firebase project and register your application. For more information on these steps, consult the Add Firebase to your JavaScript project page from Firebase.

Data structure

Once we have a project created with Firebase, we need to think a little bit about the data structure. We'll store documents in a collection called 'captures' and they'll have the following structure:

{

"public_id": "Cloudinary Public ID",

"uploaded": "timestamp"

}

At this point you may be wondering why we are only storing the public ID as opposed to the entire URL. The reason is that we'll be using the Angular SDK that Cloudinary provides. There, we can pass in the public ID along with some other options, and the URL is automagically generated for us. There's no need to store the entire URL; in fact, storing it would cause us more headaches down the line.
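The document structure can also be written down as a TypeScript type, which helps catch typos in field names. The Capture interface and the toCapture helper below are hypothetical names for this sketch; storing the timestamp as milliseconds since the epoch matches the new Date().getTime() call used later in the capture component.

```typescript
// Hypothetical type for documents in the 'captures' collection.
interface Capture {
  public_id: string; // Cloudinary public ID, not the full URL
  uploaded: number;  // milliseconds since the Unix epoch
}

// Creates a document from a public ID and the time the photo was taken.
function toCapture(publicId: string, takenAt: Date): Capture {
  return { public_id: publicId, uploaded: takenAt.getTime() };
}
```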

Adding Firebase to our App

To have the right functionality in our application, we need to install the official Angular library for Firebase via npm install firebase @angular/fire.

Once this is done, we can log in to our Firebase console and get the necessary pieces of information (like the API key) to connect to our Firebase instance. Once you have these details, add them to environment.ts:

export const environment = {

production: false,

cloudName: '',

uploadPreset: '',

firebase: {

apiKey: '',

authDomain: '',

databaseURL: '',

projectId: '',

storageBucket: '',

messagingSenderId: '',

},

};

Once this is done, we can open app.module.ts and set up Firebase:

import { AngularFireModule } from '@angular/fire';

import { AngularFirestoreModule } from '@angular/fire/firestore';

// ...

@NgModule({

// removed for brevity

imports: [

AngularFireModule.initializeApp(environment.firebase),

AngularFirestoreModule

],

})

Now that we have these in place, let's take a look at capture.component.ts and discuss the logic behind the component that will be responsible for snapping the picture and uploading it to Cloudinary.

The good news is that Cloudinary also has a promise-based API which we can directly use. So the flow is going to be the following: a user takes a picture, selects to use the picture, which is then going to be uploaded to Cloudinary. Once it's uploaded an entry is also added to Firebase in the form of the data structure discussed before. Let's break this down into sections.
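The flow described above (upload first, then record the result) can be sketched independently of Angular by passing the two side effects in as functions. Everything named here (UploadFn, SaveFn, uploadAndRecord) is hypothetical and only illustrates the ordering; the real component uses HttpClient and AngularFirestore instead.

```typescript
// Hypothetical function types standing in for the Cloudinary upload call
// and the Firestore "add document" call.
type UploadFn = (dataUrl: string) => Promise<{ public_id: string }>;
type SaveFn = (doc: { public_id: string; uploaded: number }) => Promise<void>;

// Uploads the image, then stores its public ID with the capture time.
// The save step only runs if the upload succeeded.
async function uploadAndRecord(
  dataUrl: string,
  upload: UploadFn,
  save: SaveFn,
  now: () => number = Date.now
): Promise<{ public_id: string; uploaded: number }> {
  const uploaded = now(); // time the photo was accepted by the user
  const response = await upload(dataUrl);
  const doc = { public_id: response.public_id, uploaded };
  await save(doc);
  return doc;
}
```

Injecting the two steps keeps the ordering testable without a network connection: a failed upload rejects the promise before anything is written to the database.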

Displaying the video stream

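To display the camera stream we'll be using the <video> element (as seen in the HTML code above), which we feed with the stream returned by navigator.mediaDevices.getUserMedia(). The <canvas> is only used later, to hold the captured frame: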

import { Component, OnInit, ViewChild, ElementRef, OnDestroy } from '@angular/core';

import { HttpClient } from '@angular/common/http';

import { environment } from '../../environments/environment';

import { AngularFirestore, AngularFirestoreCollection } from '@angular/fire/firestore';

import { Router } from '@angular/router';

@ViewChild('video')

video: ElementRef;

@ViewChild('canvas')

canvas: ElementRef;

private constraints = {

video: true,

};

displayStream: boolean;

captureCollectionRef: AngularFirestoreCollection<any>;

width: number;

height: number;

actions: boolean; // set to true once the stream is playing; read by the template

This is what the constructor looks like for the component:

constructor(

private http: HttpClient,

private db: AngularFirestore,

private router: Router) {

this.displayStream = true;

this.captureCollectionRef = this.db.collection<any>('captures');

}

And last but not least, this is what ngOnInit() looks like - this is where we access the media stream and add it to ...

ngOnInit() {

if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {

navigator.mediaDevices.getUserMedia(this.constraints).then(stream => {

this.video.nativeElement.srcObject = stream;

this.video.nativeElement.play();

this.video.nativeElement.addEventListener('playing', () => {

const { offsetWidth, offsetHeight } = this.video.nativeElement;

this.width = offsetWidth;

this.height = offsetHeight;

this.actions = true;

});

});

}

}

Capturing an image is added via a different function:

public capture() {

this.displayStream = false;

this.canvas.nativeElement.getContext('2d').drawImage(this.video.nativeElement, 0, 0, this.width, this.height);

this.video.nativeElement.srcObject.getVideoTracks().forEach(track => track.stop());

}

Astute readers may have noticed the displayStream class member. It exists to show or hide the camera feed programmatically: there's no need to show the stream once a picture has been taken.
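The capture() method above stops every video track once the frame has been drawn. A null-safe variant of that step might look like the following sketch. The interfaces StoppableTrack and VideoStreamLike and the helper stopVideoTracks are hypothetical; they describe only the two methods the helper needs, so a browser MediaStream can be passed in as well.

```typescript
// Minimal structural types covering what the helper uses.
interface StoppableTrack {
  stop(): void;
}

interface VideoStreamLike {
  getVideoTracks(): StoppableTrack[];
}

// Stops all video tracks of a stream and returns how many were stopped.
// Accepts null or undefined because srcObject is empty until a stream is set.
function stopVideoTracks(stream: VideoStreamLike | null | undefined): number {
  if (!stream) {
    return 0;
  }
  const tracks = stream.getVideoTracks();
  tracks.forEach((track) => track.stop());
  return tracks.length;
}
```

Stopping the tracks is what releases the camera, so the browser's "camera in use" indicator turns off while the user reviews the photo.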

The last bit of functionality that we'll highlight from this component is the upload. We take the image currently on the canvas (i.e. the photo that we just took) and upload it to Cloudinary using an HTTP POST request to https://api.cloudinary.com/v1_1/cloud_name/image/upload. Notice that Cloudinary also accepts images in a data URL format.

public usePhoto() {

const capture = this.canvas.nativeElement.toDataURL('image/jpeg');

const timeTaken = new Date().getTime();

this.http.post(`https://api.cloudinary.com/v1_1/${environment.cloudName}/image/upload`, {

file: capture,

upload_preset: environment.uploadPreset

}).subscribe((response: any) => {

if (response) {

this.captureCollectionRef.add({

public_id: response.public_id,

uploaded: timeTaken

}).then(() => {

this.router.navigateByUrl('/');

});

}

});

}

At this point, we have the complete "take picture and upload it" functionality ready.

Of course, we rarely snap a great-looking picture on the first try; therefore, we need to allow users to retake a photo if they wish. From a code perspective, this is relatively straightforward. All we need to do is show the video stream again and display the control buttons to the user again:

public retakePhoto() {

this.displayStream = true;

this.actions = false;

if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {

navigator.mediaDevices.getUserMedia(this.constraints).then(stream => {

this.video.nativeElement.srcObject = stream;

this.video.nativeElement.play();

this.video.nativeElement.addEventListener('playing', () => {

const { offsetWidth, offsetHeight } = this.video.nativeElement;

this.width = offsetWidth;

this.height = offsetHeight;

this.actions = true;

});

});

}

}

Last but not least, we also need to make sure that if someone navigates away from this component, we remove the video stream. We can do this by accessing this.video.nativeElement again, finding all the video tracks and stopping them. Since Angular provides us with a component lifecycle hook called ngOnDestroy, we can leverage it to execute the previously mentioned logic:

ngOnDestroy() {

this.actions = false;

if (this.video && this.video.nativeElement.srcObject) { // srcObject stays null if no stream was ever attached

this.video.nativeElement.srcObject.getVideoTracks().forEach(track => track.stop());

}

}

This is what the "capture" component looks like:

Displaying the feed

The next piece of functionality that we'll take a look at is displaying the feed. So far, we have discussed how to create an image, upload it to Cloudinary and store some data about it in Firebase. Now it's time to query that data and display it.

Let's open feed.component.html and add the following code:

<h1>Selfie feed <span>📱 🔥 😎</span></h1>

<mat-card

class="spaced z-depth center"

flex="50"

*ngFor="let capture of captures$ | async"

>

<cl-image public-id="{{ capture.public_id }}" mat-card-image>

<cl-transformation

width="450"

height="450"

gravity="faces"

radius="max"

crop="thumb"

border="4px_solid_rgb:000"

effect="improve"

></cl-transformation>

<cl-transformation effect="sepia:90"></cl-transformation>

</cl-image>

<mat-card-content>

<p>Uploaded at {{ capture.uploaded | date }}</p>

</mat-card-content>

<mat-card-footer></mat-card-footer>

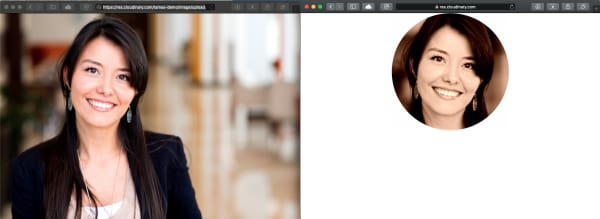

</mat-card>Notice the <cl-image> element. That's the Cloudinary Angular SDK in action and notice the public-id attribute. Using this attribute, we can render the right image, and by using <cl-transformation>, we can add transformations to the image. Let's review the transformations that we do:

- We set the width and the height of the image to be 450 pixels

- We crop the image to be a 'thumbnail.'

- We add a radius around the image with a value of 'max' (this will create a circle image)

- We apply gravity faces*

- We add a sepia effect to the image

*You're probably wondering what gravity faces exactly means. Cloudinary has a feature that finds interesting parts of the image and "zooms in on" or "puts the focus of" the image on that point. Specifying 'faces' as the value of 'gravity' means that we want to find the face(s) in the image and centre the crop on them. Cloudinary refers to this as smart and content-aware image cropping.
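Under the hood, transformations end up as segments in the image URL. The sketch below shows roughly what the two <cl-transformation> elements above correspond to, using Cloudinary's short URL parameter names (w, h, g, r, c, bo, e). The helper transformedUrl and the constant feedSteps are hypothetical, and the SDK may order the parameters differently in the URL it actually generates, so treat this as an approximation.

```typescript
// Each inner array is one chained transformation step; parameters within a
// step are comma-separated and steps are separated by a slash.
const feedSteps: string[][] = [
  ['w_450', 'h_450', 'g_faces', 'r_max', 'c_thumb', 'bo_4px_solid_rgb:000', 'e_improve'],
  ['e_sepia:90'],
];

// Builds a delivery URL with the transformation steps placed between
// /image/upload/ and the public ID. The jpg default is an assumption.
function transformedUrl(
  cloudName: string,
  publicId: string,
  steps: string[][],
  extension: string = 'jpg'
): string {
  const path = steps.map((step) => step.join(',')).join('/');
  return `https://res.cloudinary.com/${cloudName}/image/upload/${path}/${publicId}.${extension}`;
}
```

Because the transformations live in the URL, the same stored public ID can be rendered in many different ways without uploading the image again.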

For reference here's a screenshot of two images - the one on the left is the original image, and the one on the right has all the previously mentioned optimisations & transformations applied to it.

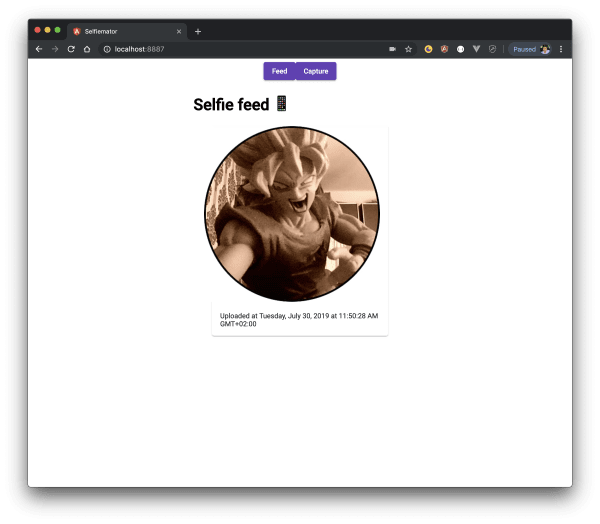

Now that we have this knowledge, let's take a look at the component's logic. All we need to do is display the images that users took. All the images get stored in Firebase, and they have two values: a public ID and an uploaded time. This means that we can query our dataset and return the data in descending order based on the upload time, and we can also extract the public ID and feed that to the Cloudinary Angular SDK to display the images:

import { AngularFirestore } from '@angular/fire/firestore';

import { Observable } from 'rxjs';

// ...

export class FeedComponent {

captures$: Observable<any>;

online: boolean;

constructor(db: AngularFirestore) {

const ref = db.collection('captures', (ref) =>

ref.orderBy('uploaded', 'desc')

);

this.captures$ = ref.valueChanges();

}

}

Believe it or not, this is all the code that we need to achieve what we wanted. Take note of this.captures$ = ref.valueChanges(); which is an observable. Whenever a new value gets added to Firebase, captures$ gets updated and it renders the new image to the screen.
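To picture what each emission of captures$ contains, here is a small local model of the orderBy('uploaded', 'desc') query. It does not talk to Firebase at all; the Capture interface and the newestFirst helper are hypothetical and only mimic the ordering that the query asks for.

```typescript
// Hypothetical type matching the documents stored in 'captures'.
interface Capture {
  public_id: string;
  uploaded: number; // milliseconds since the Unix epoch
}

// Returns a new array sorted by upload time, newest first,
// leaving the input array untouched.
function newestFirst(captures: Capture[]): Capture[] {
  return captures.slice().sort((a, b) => b.uploaded - a.uploaded);
}
```

When a new capture is added, the observable emits the whole list again with the new document at the top, which is why the template needs nothing more than the async pipe.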

This is what the "feed" component looks like (notice the applied effects and transformations as well):

End of part one

In this article, we have created an application that uses Angular, Firebase and Cloudinary to display images taken with the built-in camera of a laptop or mobile device. At this point, the app itself is not a PWA because we cannot take the application offline, nor is it installable. There's still more work to be done, but we'll take a look at that in the next article.