MCP Servers - The Bridge Between LLMs and Real-World Tools

If you’ve played around with large language models (LLMs) long enough, you’ll know that making them “do stuff” in the real world, like querying APIs, triggering workflows, or working with files, has been a mess of fragile glue code and bespoke wrappers.

Model Context Protocol (MCP) is one of the most promising efforts to fix that.

It provides a structured, tool-agnostic way for language models to interact with external tools and resources. And at the heart of it all sits the MCP server, the part of your application that exposes tools and context to the LLM.

In this post, we’ll dive deep into what the MCP server does, how it works, and how you can build your own.

What is an MCP Server?

An MCP server is a standalone process that acts as a context provider for LLMs. It exposes three types of functionality:

- Tools – callable functions (like getWeather, searchDocs, sendEmail)

- Resources – file-like data that can be read by clients (like API responses or file contents)

- Prompts – reusable natural language prompt templates that help users achieve tasks

It communicates with the LLM runtime (the client) using JSON-RPC over stdio, HTTP(S), or Server-Sent Events (SSE). This allows the LLM to discover what capabilities are available, invoke them with structured arguments, and receive a structured response to carry on the conversation.

A single MCP server can register multiple tools, and those tools can persist state across calls, which makes it a good fit for orchestrating multi-step workflows.
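To make the point about state concrete, here is a minimal sketch in plain TypeScript, with no SDK involved. The names `createNoteTools`, `addNote`, and `listNotes` are hypothetical; the only thing borrowed from MCP is the `content` array shape used for tool results. Both handlers close over the same array, so whatever one call stores is visible to the next for as long as the server process lives.

```typescript
// Shape of a text tool result; mirrors the `content` array MCP tools return.
type ToolResult = { content: { type: 'text'; text: string }[] };

// Hypothetical factory: both handlers share `notes`, which lives as long as the process.
function createNoteTools() {
  const notes: string[] = [];

  const addNote = (args: { text: string }): ToolResult => {
    notes.push(args.text);
    return { content: [{ type: 'text', text: `Stored note #${notes.length}` }] };
  };

  const listNotes = (): ToolResult => ({
    content: [{ type: 'text', text: JSON.stringify(notes) }],
  });

  return { addNote, listNotes };
}

const tools = createNoteTools();
tools.addNote({ text: 'buy power converters' });
tools.addNote({ text: 'fix hyperdrive' });
console.log(tools.listNotes().content[0].text);
```

In-memory state like this disappears when the process restarts, so anything that must survive longer would need real storage.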

A Typical Call Flow

Let’s break down the standard lifecycle:

- The MCP client starts and connects to your MCP server.

- It sends a listTools call to discover what’s available.

- Based on the tool list, it chooses a tool and sends a structured invocation. This only happens when the input to the LLM is about a topic that requires a tool call.

- Your server executes the function, then responds with a result.
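On the wire, the discovery, invocation, and result steps are ordinary JSON-RPC 2.0 messages. The sketch below builds them as plain objects. `tools/list` and `tools/call` are the protocol's method names; the helper `rpcRequest`, the ids, and the sample result payload are made up for illustration, and the `initialize` handshake that happens while connecting is left out.

```typescript
// Minimal JSON-RPC 2.0 request shape.
interface RpcRequest {
  jsonrpc: '2.0';
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// Hypothetical helper that stamps the fixed protocol version onto a request.
function rpcRequest(id: number, method: string, params?: Record<string, unknown>): RpcRequest {
  return params === undefined
    ? { jsonrpc: '2.0', id, method }
    : { jsonrpc: '2.0', id, method, params };
}

// Discovery: the client asks which tools exist.
const listRequest = rpcRequest(1, 'tools/list');

// Invocation: the client calls one tool by name with structured arguments.
const callRequest = rpcRequest(2, 'tools/call', {
  name: 'getCharacter',
  arguments: { name: 'Han Solo' },
});

// Result: the server answers with the same id and a content array (sample payload).
const callResponse = {
  jsonrpc: '2.0',
  id: callRequest.id,
  result: { content: [{ type: 'text', text: '{ "name": "Han Solo" }' }] },
};

console.log(JSON.stringify([listRequest, callRequest, callResponse], null, 2));
```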

Anatomy of an MCP Server

At a code level, an MCP server is mostly just:

- A schema definition (tools, resources, prompts)

- A dispatcher that routes incoming method calls

- A set of resolvers or handlers for each tool/resource
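A toy version of those three pieces, without the SDK, might look like the following. Everything here (`ToolDef`, `registry`, `dispatch`, the `echo` tool) is a hypothetical stand-in meant to show the shape of the idea. A real server would leave validation, transports, and error codes to the SDK.

```typescript
type ToolResult = { content: { type: 'text'; text: string }[] };

// 1. Schema definition: what each tool is called and what it accepts.
interface ToolDef {
  description: string;
  inputSchema: Record<string, string>; // simplified: argument name -> type name
  handler: (args: Record<string, unknown>) => ToolResult; // 3. the handler
}

const registry = new Map<string, ToolDef>();

registry.set('echo', {
  description: 'Return the given message unchanged',
  inputSchema: { message: 'string' },
  handler: (args) => ({ content: [{ type: 'text', text: String(args.message) }] }),
});

// 2. Dispatcher: route an incoming method name to the right piece of code.
function dispatch(
  method: string,
  params: { name?: string; arguments?: Record<string, unknown> } = {},
): unknown {
  if (method === 'tools/list') {
    return {
      tools: Array.from(registry.entries()).map(([name, def]) => ({
        name,
        description: def.description,
        inputSchema: def.inputSchema,
      })),
    };
  }
  if (method === 'tools/call') {
    const tool = registry.get(params.name ?? '');
    if (!tool) throw new Error(`Unknown tool: ${params.name}`);
    return tool.handler(params.arguments ?? {});
  }
  throw new Error(`Unknown method: ${method}`);
}

console.log(JSON.stringify(dispatch('tools/call', { name: 'echo', arguments: { message: 'hi' } })));
```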

Why This Matters

So why would we bother with all of this? The answer is really simple: the LLM is no longer just a chat interface. It becomes a controller that orchestrates real systems.

With an MCP server, you can expose:

- Your file system for editing or summarising docs

- APIs for customer data, analytics, payments

- Third-party integrations (Slack, Notion, GitHub, Postgres)

- UI-driven flows like form filling or test automation

And because MCP is model-agnostic, it works with anything that understands the protocol: Claude, Gemini, LLaMA, etc.

Sample implementation

Let’s take a look at a sample MCP server that communicates over stdio and defines multiple tools for querying the Star Wars API. This is a very typical implementation: MCP servers are often just wrappers on top of existing APIs. This is the real power behind MCP servers, because these APIs can now be consumed easily by any large language model.

Module imports

import { McpServer } from '@modelcontextprotocol/sdk/server/mcp.js';
import { StdioServerTransport } from '@modelcontextprotocol/sdk/server/stdio.js';
import { z } from 'zod';

At the top of the file, we import three modules. McpServer is the main class used to define and manage the server. StdioServerTransport provides a transport mechanism for communicating via standard input/output, which is useful for local CLI tools or desktop LLM apps. zod is a schema validation library used here to define structured tool input types.

Defining the API Base URL

const BASE_URL = 'https://swapi-api.hbtn.io/api';

The BASE_URL constant stores the root URL of the Star Wars API. This specific endpoint supports CORS and is reliable for public use. All API requests in this server will be built from this base.

Main Server Entry Point

async function main() {
  const server = new McpServer({
    name: 'swapi-server',
    version: '1.0.0',
  });

We define an asynchronous main() function, which serves as the server’s entry point. Inside it, we create a new instance of McpServer, passing in basic metadata like the server name and version. This metadata helps identify the server to clients.

Helper Function for Resource Fetching

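async function fetchFirst(resource: string, queryKey: string, queryVal: string) {
  // Build the search URL, escaping the user-supplied value.
  const url = `${BASE_URL}/${resource}/?${queryKey}=${encodeURIComponent(queryVal)}`;
  const res = await fetch(url);
  // Surface HTTP failures instead of trying to parse an error page as JSON.
  if (!res.ok) {
    return { error: `Request for ${resource} failed with status ${res.status}` };
  }
  const json = await res.json();
  const results: any[] = Array.isArray(json.results) ? json.results : [];
  // Return the first match, or an error object when the search comes back empty.
  return results.length > 0
    ? results[0]
    : { error: `No match in ${resource} for "${queryVal}"` };
}

The fetchFirst function abstracts the logic of querying SWAPI. It builds a search URL based on a resource type (like people or starships), sends a fetch request, and returns the first result in the response array. If the request fails or nothing is found, it returns an error object describing what went wrong.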

Registering the getCharacter Tool

server.registerTool(
  'getCharacter',
  {
    title: 'Get Star Wars Character',
    description: 'Fetch a character by name',
    inputSchema: {
      name: z.string().describe("Character name, e.g. 'Han Solo'"),
    },
  },
  async (args) => {
    const data = await fetchFirst('people', 'search', args.name);
    return {
      content: [{ type: 'text', text: JSON.stringify(data, null, 2) }],
    };
  },
);

Here we register our first tool, called getCharacter. It has a title and description that an LLM can use to understand its purpose. The input schema defines a single string field called name, using Zod. When this tool is called, the handler searches the people resource using the input name, then returns the result as a formatted text block.

This part is the key. This function, and all the subsequent ones we’ll be adding, are the tools available to the LLM. They are registered with the server using the registerTool method. You can think of them as wrappers around the API: through them, we have now exposed its functionality to the LLM.
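One thing the handler above does not do is tell the client when a lookup failed: the `{ error: ... }` object from fetchFirst is returned as ordinary text. Tool results in MCP can carry an `isError` flag for this case. The helper below, `toToolResult`, is a hypothetical addition and not part of the original server. It is a sketch of how that flag could be set.

```typescript
// Text tool result with the optional isError flag from the MCP tool result shape.
interface TextToolResult {
  content: { type: 'text'; text: string }[];
  isError?: boolean;
}

// Hypothetical helper: wraps fetchFirst-style data and marks `{ error }` objects as failures.
function toToolResult(data: unknown): TextToolResult {
  const failed = typeof data === 'object' && data !== null && 'error' in data;
  const result: TextToolResult = {
    content: [{ type: 'text', text: JSON.stringify(data, null, 2) }],
  };
  if (failed) result.isError = true;
  return result;
}

console.log(toToolResult({ name: 'Han Solo' }));
console.log(toToolResult({ error: 'not found' }));
```

Inside a handler, this would replace the inline return statement, roughly as `return toToolResult(await fetchFirst('people', 'search', args.name));`.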

Registering Other Similar Tools

The same pattern is followed for the other Star Wars data types. Each tool has a similar input schema and a handler that calls fetchFirst. Remember, the input schema always specifies which arguments the tool accepts (here they map 1:1 to the parameters we send to the API itself).
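Because these lookup tools differ only in a handful of strings, the repetition could also be captured in a small table. The sketch below is an alternative layout, not how the server in this post is written. `LookupSpec`, `LOOKUPS`, and `describeLookup` are hypothetical names, and the zod schema is left out so the snippet runs without dependencies.

```typescript
// One row per lookup tool: only these fields vary between registrations.
interface LookupSpec {
  tool: string;          // tool name exposed to the LLM
  resource: string;      // SWAPI resource path segment
  arg: 'name' | 'title'; // name of the single input argument
  label: string;         // noun used in the title and description
}

const LOOKUPS: LookupSpec[] = [
  { tool: 'getCharacter', resource: 'people', arg: 'name', label: 'Character' },
  { tool: 'getStarship', resource: 'starships', arg: 'name', label: 'Starship' },
  { tool: 'getPlanet', resource: 'planets', arg: 'name', label: 'Planet' },
  { tool: 'getFilm', resource: 'films', arg: 'title', label: 'Film' },
  { tool: 'getSpecies', resource: 'species', arg: 'name', label: 'Species' },
  { tool: 'getVehicle', resource: 'vehicles', arg: 'name', label: 'Vehicle' },
];

// Derive the metadata that each registerTool call otherwise spells out by hand.
function describeLookup(spec: LookupSpec) {
  return {
    title: `Get Star Wars ${spec.label}`,
    description: `Fetch a ${spec.label.toLowerCase()} by ${spec.arg}`,
  };
}

for (const spec of LOOKUPS) {
  console.log(spec.tool, describeLookup(spec));
}
```

A loop over `LOOKUPS` could then call registerTool once per row, adding a zod schema for `spec.arg` and a handler that forwards that argument to fetchFirst. Spelling each tool out by hand, as this post does, is longer but easier to read when you are seeing the API for the first time.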

server.registerTool(
  'getStarship',
  {
    title: 'Get Star Wars Starship',
    description: 'Fetch a starship by name',
    inputSchema: {
      name: z.string().describe("Starship name, e.g. 'Millennium Falcon'"),
    },
  },
  async (args) => {
    const data = await fetchFirst('starships', 'search', args.name);
    return {
      content: [{ type: 'text', text: JSON.stringify(data, null, 2) }],
    };
  },
);

server.registerTool(
  'getPlanet',
  {
    title: 'Get Star Wars Planet',
    description: 'Fetch a planet by name',
    inputSchema: {
      name: z.string().describe("Planet name, e.g. 'Tatooine'"),
    },
  },
  async (args) => {
    const data = await fetchFirst('planets', 'search', args.name);
    return {
      content: [{ type: 'text', text: JSON.stringify(data, null, 2) }],
    };
  },
);

server.registerTool(
  'getFilm',
  {
    title: 'Get Star Wars Film',
    description: 'Fetch a film by title',
    inputSchema: {
      title: z.string().describe("Film title, e.g. 'A New Hope'"),
    },
  },
  async (args) => {
    const data = await fetchFirst('films', 'search', args.title);
    return {
      content: [{ type: 'text', text: JSON.stringify(data, null, 2) }],
    };
  },
);

server.registerTool(
  'getSpecies',
  {
    title: 'Get Star Wars Species',
    description: 'Fetch a species by name',
    inputSchema: {
      name: z.string().describe("Species name, e.g. 'Wookiee'"),
    },
  },
  async (args) => {
    const data = await fetchFirst('species', 'search', args.name);
    return { content: [{ type: 'text', text: JSON.stringify(data, null, 2) }] };
  },
);

server.registerTool(
  'getVehicle',
  {
    title: 'Get Star Wars Vehicle',
    description: 'Fetch a vehicle by name',
    inputSchema: {
      name: z.string().describe("Vehicle name, e.g. 'Sand Crawler'"),
    },
  },
  async (args) => {
    const data = await fetchFirst('vehicles', 'search', args.name);
    return { content: [{ type: 'text', text: JSON.stringify(data, null, 2) }] };
  },
);

server.registerTool(
  'searchResource',
  {
    title: 'Search SWAPI Resource',
    description: 'Generic search by resource type and query',
    inputSchema: {
      resource: z
        .enum(['people', 'starships', 'planets', 'films', 'species', 'vehicles'])
        .describe('One of: people, starships, planets, films, species, vehicles'),
      query: z.string().describe('Search term'),
    },
  },
  async (args) => {
    const { resource, query } = args as { resource: string; query: string };
    const url = `${BASE_URL}/${resource}/?search=${encodeURIComponent(query)}`;
    const res = await fetch(url);
    const json = await res.json();
    return {
      content: [{ type: 'text', text: JSON.stringify(json.results || [], null, 2) }],
    };
  },
);

Setting Up stdio Transport

  const transport = new StdioServerTransport();
  await server.connect(transport);
  console.error('✅ SWAPI MCP server (full toolkit) running over STDIO');
} // closes main()

To expose the server to a client, we create a StdioServerTransport and call connect() on the MCP server instance. From that point on, the server listens for incoming tool invocations on standard input. Note that the status message is written with console.error: with the stdio transport, stdout carries the protocol messages, so logging has to go to stderr.

Running the Server

Last but not least, all we need to do is call the main() function to start the server.
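The call itself is not shown above, so here is one common way to write it, offered as a sketch. The stub `main` below only stands in for the function assembled in the previous sections, and the `.catch` handler is an assumption about how you might want startup failures reported.

```typescript
// Stand-in for the main() built up above, so this snippet runs on its own.
async function main(): Promise<void> {
  // ...create the McpServer, register the tools, connect the transport...
}

// Start the server; log startup failures to stderr and exit with a non-zero code.
main().catch((error: unknown) => {
  console.error('Fatal error while starting the server:', error);
  process.exit(1);
});
```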

Debugging

In an upcoming article, we’ll take a look at how to use the MCP server from an MCP client (which we’ll write ourselves as well), but for now we should be able to run the server locally and test it. Luckily, there’s an inspector tool that allows us to do just that.

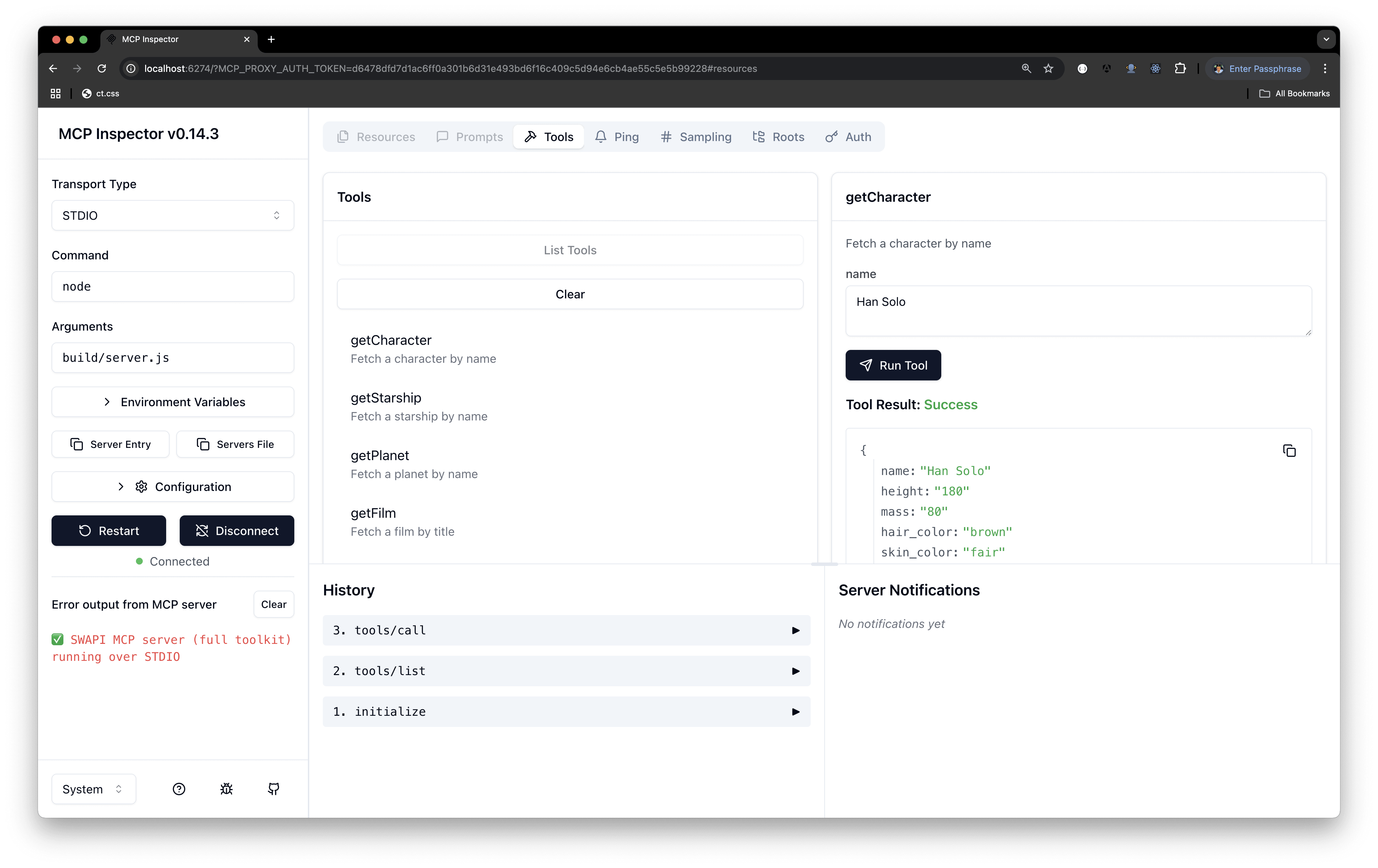

The command we need to run is npx @modelcontextprotocol/inspector node build/server.js (make sure that build/server.js exists). Copy the URL printed by this command and paste it into your browser. The result should look similar to the screenshot above. Notice the ‘list tools’ button: clicking it reveals all of the available tools. Each tool can be run with sample arguments, and its results are shown once the call completes.

Wrap-up

MCP servers make it much easier to turn language models from passive text generators into active, stateful agents that work with your tools and data. A really powerful aspect of an MCP server is its ability to wrap your existing APIs, and potentially SDKs, and expose them to the model, as we have seen with the Star Wars API.